Originally designed for internal use, this Traditional Chinese AI model will be open-sourced and shared publicly.

Multinational electronics contract manufacturing giant Foxconn has announced the launch of the first Traditional Chinese large language model (LLM). The model was developed by Foxconn's research and development arm, Hon Hai Research Institute (HHRI), and the company says it was trained with a more efficient, lower-cost method, with training completed in four weeks.

The institute, which is headquartered in Tucheng, Taiwan, said the LLM, named FoxBrain, will be open-sourced and shared publicly, but did not disclose a timeline.

It was originally designed for the company’s internal systems, covering functions such as data analysis, decision support, document collaboration, mathematics, reasoning, problem solving, and code generation.

The company says FoxBrain not only demonstrates powerful comprehension and reasoning capabilities but is also optimized for Taiwanese users’ language style, showing excellent performance in mathematical and logical reasoning tests.

“In recent months, the deepening of reasoning capabilities and the efficient use of GPUs have gradually become the mainstream development in the field of AI. Our FoxBrain model adopted a very efficient training strategy, focusing on optimizing the training process rather than blindly accumulating computing power,” said Dr. Yung-Hui Li, Director of the Artificial Intelligence Research Center at Hon Hai Research Institute. “Through carefully designed training methods and resource optimization, we have successfully built a local AI model with powerful reasoning capabilities.”

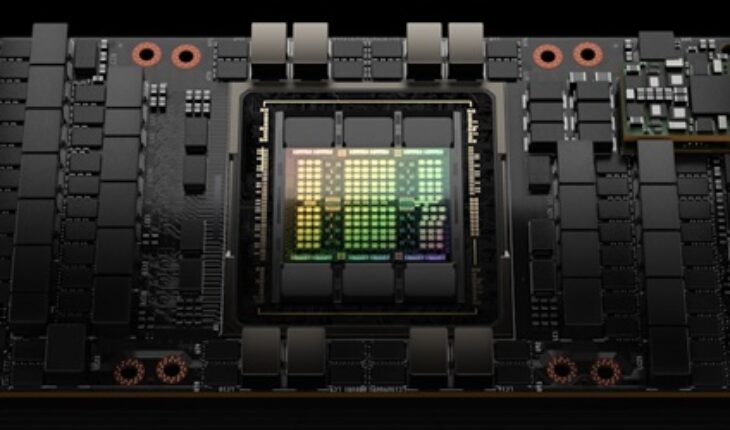

The FoxBrain training process was powered by 120 NVIDIA H100 GPUs, scaled with NVIDIA Quantum-2 InfiniBand networking, and finished in about four weeks. The company says that, compared with inference models recently launched on the market, this more efficient, lower-cost training method marks a new milestone for the development of Taiwan's AI technology.

FoxBrain is based on the Meta Llama 3.1 architecture with 70B parameters. In most categories of the TMMLU+ test dataset, it outperforms Llama-3-Taiwan-70B, a model of the same scale, and particularly excels in mathematics and logical reasoning. Technical specifications and training strategies for FoxBrain include:

- Established data augmentation methods and quality assessment for 24 topic categories through proprietary technology, generating 98B tokens of high-quality pre-training data for Traditional Chinese

- Context window length: 128K tokens

- Utilized 120 NVIDIA H100 GPUs for training, with a total computational cost of 2,688 GPU-days

- Employed multi-node parallel training architecture to ensure high performance and stability

- Used a unique Adaptive Reasoning Reflection technique to train the model in autonomous reasoning
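As a rough consistency check between the reported compute budget and the "about four weeks" timeline, the sketch below divides the stated 2,688 GPU-days by the 120 GPUs. It assumes, as a simplification the article does not confirm, that all 120 GPUs ran concurrently for the entire training period; the helper name `wall_clock_days` is hypothetical.

```python
# Figures reported in the article
NUM_GPUS = 120          # NVIDIA H100 GPUs used for training
TOTAL_GPU_DAYS = 2_688  # total computational cost reported


def wall_clock_days(gpu_days: float, num_gpus: int) -> float:
    """Hypothetical helper: wall-clock days implied by a GPU-day budget,
    assuming every GPU is busy for the whole run (an assumption)."""
    return gpu_days / num_gpus


days = wall_clock_days(TOTAL_GPU_DAYS, NUM_GPUS)
weeks = days / 7

# Upper bound: all 120 GPUs running for a full four weeks (28 days)
four_week_gpu_days = NUM_GPUS * 4 * 7

print(f"Implied wall-clock time: {days:.1f} days (~{weeks:.1f} weeks)")
print(f"GPU-days for a full four weeks on {NUM_GPUS} GPUs: {four_week_gpu_days:,}")
```

Under this assumption the budget corresponds to about 22.4 days of continuous use of all 120 GPUs, somewhat under four full weeks (3,360 GPU-days), which fits the "about four weeks" figure; the article does not break down how the remaining time was spent.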

The company says FoxBrain showed comprehensive improvements in mathematics over the base Meta Llama 3.1 model. It made significant gains on mathematical tests over Taiwan Llama, which the company describes as currently the best Traditional Chinese large model, and surpassed Meta's current models of the same class in mathematical reasoning ability. Although it still trails DeepSeek's distilled model slightly, Hon Hai says its performance is already very close to world-leading standards.

Every stage of FoxBrain's development was carried out step by step through independent research, from data collection, cleaning, and augmentation to Continual Pre-Training, Supervised Fine-Tuning, reinforcement learning from AI feedback (RLAIF), and Adaptive Reasoning Reflection. The company says this approach ultimately produced results approaching those of world-class AI models despite limited computational resources.

Although FoxBrain was originally designed for internal group applications, in the future, Foxconn will continue to collaborate with technology partners to expand FoxBrain’s applications, share its open-source information, and promote AI in manufacturing, supply chain management, and intelligent decision-making.

NVIDIA provided support during training through the Taipei-1 Supercomputer and technical consultation, enabling Hon Hai Research Institute to successfully complete the model pre-training with NVIDIA NeMo. FoxBrain will also become an important engine to drive the upgrade of Foxconn’s three major platforms: Smart Manufacturing, Smart EV and Smart City.

The results of FoxBrain are scheduled to be shared publicly for the first time in a session talk at NVIDIA GTC 2025 on March 20.

Hon Hai Research Institute, the research and development arm of Foxconn, has five research centers. Each center has an average of 40 high-tech R&D professionals focused on developing new technologies and strengthening Foxconn's technology and product innovation pipeline.