The eSP936 integrates with multi-modal VLMs, combining multiple visual sensors with real-time AI edge computing capabilities.

LAS VEGAS, NV and TAIPEI, Taiwan, Jan 3, 2025 – Etron Technology subsidiary eYs3D Microelectronics has launched the new eSP936 multi-sensor image controller IC. The eSP936 supports the synchronous processing of data from up to seven visual sensors, providing high image recognition accuracy. Paired with the Sense and React human-machine interaction developer interface, it enables intelligent control and serves as a key driver for implementing smart applications.

The eSP936 can be integrated with multi-modal vision-language models (VLMs), combining multiple visual sensors with real-time AI edge computing capabilities. It suits unmanned vehicles such as automated guided vehicles, autonomous mobile robots, and drones. The eSP936 can process multiple 2D images at high speed and generate 3D depth maps for more precise environmental recognition. Embedded AI chips further enable dynamic navigation in complex environments. Industrial and service robots can likewise achieve more precise perception in complex scenarios, combining real-time computing with automated operation. In immersive human-machine interaction systems, the eSP936 can be integrated with AI SoC platforms to enhance the Sense and React interaction experience, with applications in drones, unmanned surface vehicles (USVs), video conferencing, augmented education, and extended reality (XR).

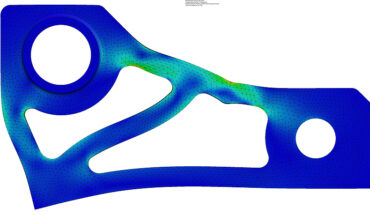

Key technology highlights of the eSP936 include synchronized processing of data from up to seven visual sensors, a built-in DRAM chip, and wide-angle image de-warping, which together enable high-precision environmental perception and multi-view 3D depth map generation. The chip also features high-performance data compression to reduce latency, giving developers a flexible and efficient platform. Its simultaneous MIPI and USB processing ensures high-quality 2D image and 3D depth map output, improving image recognition accuracy.

Latest YX9170 Spatial Awareness Solution for Autonomous Vehicles

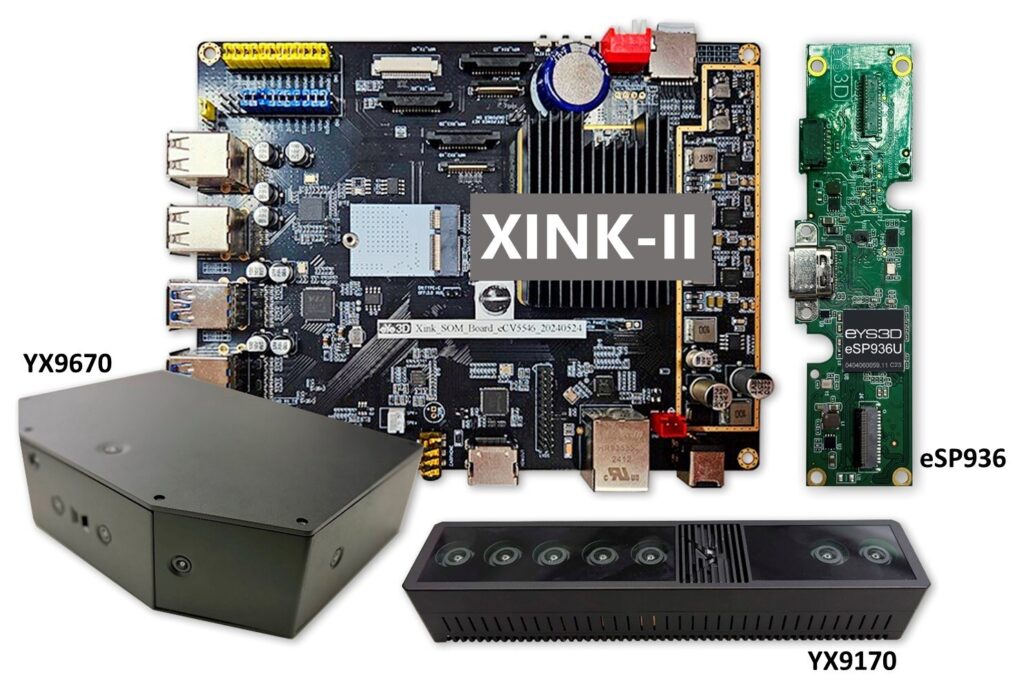

Debuting at CES 2025, eYs3D Microelectronics proudly unveils its cutting-edge spatial awareness solution, YX9170. Powered by the eYs3D multi-sensor image control chip eSP936 and the XINK-ll edge spatial computing platform, this solution leverages advanced multi-sensor fusion and AI-driven technologies to deliver breakthroughs in spatial perception and recognition. It provides robust support for the development of intelligent systems such as industrial and service robots and autonomous vehicles, emerging as a critical driver for smart system innovation.

The core strength of the YX9170 solution lies in its comprehensive sensor fusion capabilities. It integrates dual depth sensors, supporting high-definition images up to 1280×720 resolution, and synchronizes four RGB cameras for enhanced perception range and recognition accuracy. With support for multiple stereo lens baselines, it overcomes the limitations of traditional stereo vision measurement techniques. The design also addresses earlier challenges in multi-camera image processing and computational architecture integration, forming a holistic AI-based spatial awareness system.
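Why multiple stereo baselines help can be seen from the standard pinhole stereo relation Z = f·B/d, where Z is depth, f the focal length in pixels, B the baseline, and d the disparity. The following is a minimal sketch of that relation only, not eYs3D's implementation; the focal length and baseline values are hypothetical and are not taken from any eSP936 or YX9170 specification.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Standard pinhole stereo relation: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_step(focal_px: float, baseline_m: float, depth_m: float,
               disparity_step_px: float = 1.0) -> float:
    """Approximate depth change per disparity step at a given depth:
    dZ ~= Z^2 / (f * B) * dd (first-order derivative of Z = f * B / d)."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_step_px


# Hypothetical values, for illustration only.
FOCAL_PX = 800.0
BASELINES_M = {"short": 0.03, "long": 0.12}

for name, baseline in BASELINES_M.items():
    z = depth_from_disparity(FOCAL_PX, baseline, disparity_px=8.0)
    step = depth_step(FOCAL_PX, baseline, depth_m=3.0)
    print(f"{name} baseline: depth at 8 px = {z:.2f} m, depth step at 3 m = {step:.3f} m")
```

Under these assumed numbers, the longer baseline resolves depth more finely at a given distance, while a shorter baseline keeps disparities small enough to match nearby objects; combining several baselines is one common way to cover both ranges.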

A highlight of the YX9170 solution is its intelligent sensing features. The embedded AI algorithms enable real-time multi-object recognition from synchronized images, achieving a 30% reduction in system computational load and latency. Through YX9170 and XINK-ll’s highly flexible and adaptable software solutions, customers can swiftly design tailored spatial awareness solutions with integrated multi-sensor fusion, showcasing eYs3D Microelectronics’ unparalleled technical prowess.

Latest YX9670 Navigation Solution for Autonomous Vehicles

eYs3D introduces its groundbreaking YX9670 navigation solution for autonomous vehicles. Equipped with the eYs3D multi-sensor image control chip eSP936 and the XINK-ll edge spatial computing platform, this innovative solution revolutionizes environmental awareness and navigation capabilities through advanced multi-sensor fusion and AI-driven technology.

The core advantage of the YX9670 solution lies in its comprehensive sensor fusion capabilities. It integrates a dual-depth sensor system supporting high-definition images up to 1280×720 resolution and synchronizes four RGB cameras to deliver a 278-degree panoramic field of view. Additionally, a monochrome camera provides a 145-degree overhead view, with an embedded high-efficiency AHRS (Attitude and Heading Reference System) for vessel coordination and posture recognition. The system also incorporates a thermal imaging sensor, creating a highly advanced perception system.

The YX9670 solution’s embedded AI algorithms enable real-time panoramic object recognition, navigation direction analysis, and multi-target tracking. Even in complex or harsh environments, the system maintains high detection and recognition efficiency, showcasing its exceptional technical capabilities.

Already successfully deployed in autonomous vessel navigation, the YX9670 solution provides safe and efficient navigation support for logistics vessels, environmental monitoring ships, and specialized unmanned marine applications. With its cutting-edge sensing technology and AI computational power, the solution not only meets current needs but also paves the way for a new era of intelligent navigation and human-machine interaction interfaces.

XINK-ll Edge Spatial Computing Platform and Sense and React Human-Machine Interaction Developer Toolkit

eYs3D Microelectronics will debut its XINK-ll Edge Spatial Computing Platform at CES 2025. This “Platform as a Service (PaaS)” development solution is built on the eYs3D AI chip eCV5546, which features ARM Cortex-A and Cortex-M CPU cores along with a neural processing unit (NPU). The platform supports the integration of AHRS, thermal imaging, and millimeter-wave radar sensors and incorporates convolutional neural network (CNN) technology to significantly enhance object recognition and detection on edge AI devices. Notably, ARM IoT Capital has provided the XINK-ll platform with both funding and significant performance enhancements. The Neon instruction set on the ARM CPUs provides SIMD processing, accelerating vector and matrix operations for better computer vision and signal processing performance. Additionally, the low-power ARM Cortex-M4 processor serves as an MCU for system control, motor operation, and timeline synchronization. With a next-generation AI accelerator, the platform delivers superior performance compared to its peers and offers programmable development capabilities that support various AI models. In the rapidly evolving AI landscape, XINK-ll is well positioned to meet future computational demands. XINK-ll supports a wide range of AI development and acceleration frameworks, including ONNX, TensorFlow, TensorFlow Lite, and PyTorch, among others, and provides complete development kits and services to help developers build NPU applications more efficiently.

eYs3D Microelectronics also introduces the Sense and React human-machine interaction developer interface, which integrates large language models (LLMs) and CNN sensing technology. The interface draws on environmental sensing and uses LLMs to trigger action commands and language prompts. It enables machines to issue commands or interact naturally with users through conversational language, marking a new era in human-machine interface technology.

Sense and React offers environmental awareness and cognition, action triggering with language prompts, and flexible adaptability. Through CNN sensing technology, the system efficiently perceives the surrounding environment, capturing subtle changes and dynamics for more accurate judgments and decisions. Using LLM technology, the system generates action commands and language prompts based on sensor data, enhancing user convenience and delivering a more intelligent experience.
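The sense-then-react flow described above can be pictured as a small pipeline: perception output is turned into a text prompt, and a language step returns an action command plus a spoken prompt. The sketch below is purely illustrative and does not reflect the actual Sense and React interface; `Detection`, `build_prompt`, and `stub_language_model` are hypothetical names, and the language step is a rule-based stand-in rather than a real LLM call.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # object class reported by the (hypothetical) CNN stage
    distance_m: float  # estimated distance, e.g. read from a depth map


def build_prompt(detections: list[Detection]) -> str:
    """Turn perception output into a text prompt for a language model."""
    if not detections:
        return "Scene: no objects detected. Decide the next action."
    items = "; ".join(f"{d.label} at {d.distance_m:.1f} m" for d in detections)
    return f"Scene: {items}. Decide the next action."


def stub_language_model(detections: list[Detection]) -> dict:
    """Rule-based stand-in for the LLM step (assumption for illustration).
    A real system would send the build_prompt() text to a model instead."""
    nearest = min(detections, key=lambda d: d.distance_m, default=None)
    if nearest is not None and nearest.distance_m < 1.0:
        return {"action": "stop", "say": f"Stopping: {nearest.label} is close ahead."}
    return {"action": "continue", "say": "Path is clear."}


scene = [Detection("person", 0.8), Detection("pallet", 2.5)]
print(build_prompt(scene))
print(stub_language_model(scene))
```

Keeping the prompt builder separate from the decision step means the stand-in can later be swapped for a real model without touching the perception side.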

For more information about eYs3D Microelectronics, visit eys3d.com.

For more information about Etron Technology, visit etron.com.