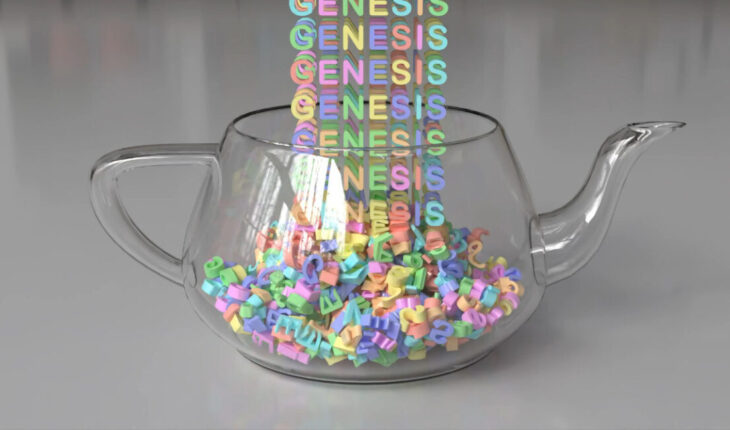

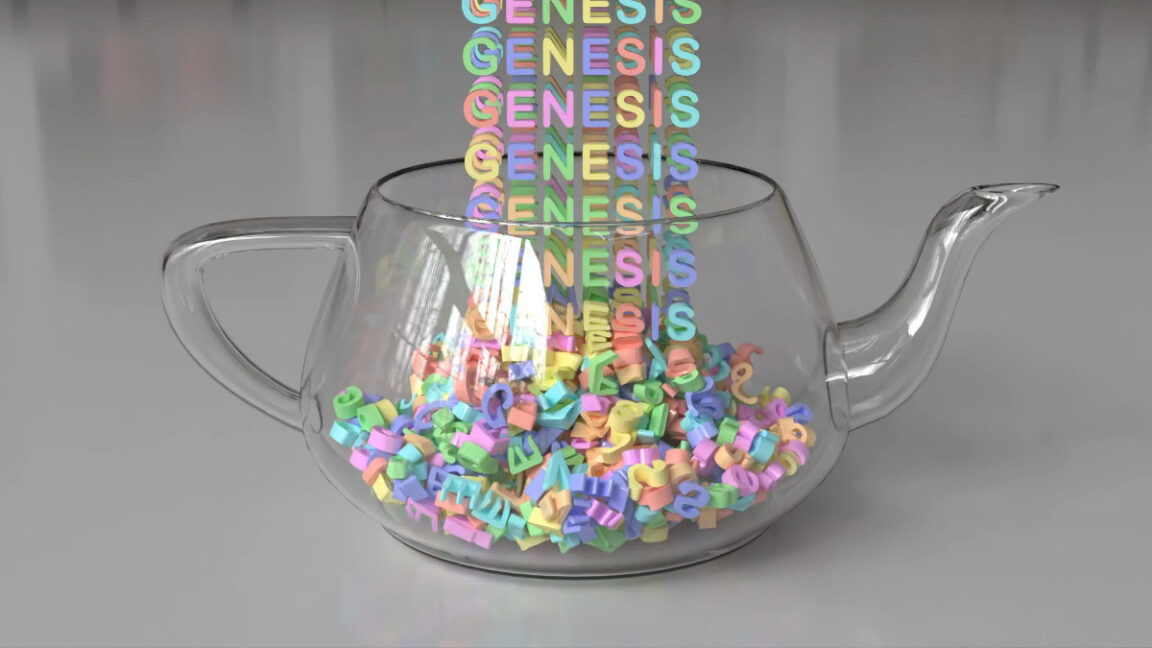

The AI-generated worlds reportedly include realistic physics, camera movements, and object behaviors, all from text commands. The system then creates physically accurate ray-traced videos and data that robots can use for training.

Examples of “4D dynamical and physical” worlds that Genesis created from text prompts.

This prompt-based system lets researchers create complex robot testing environments by typing natural language commands instead of programming them by hand. “Traditionally, simulators require a huge amount of manual effort from artists: 3D assets, textures, scene layouts, etc. But every component in the workflow can be automated,” wrote Fan.

Using its engine, Genesis can also generate character motion, interactive 3D scenes, facial animation, and more. That may allow for the creation of artistic assets for creative projects, and it may also lead to more realistic AI-generated games and videos in the future, because Genesis constructs a simulated world in data instead of operating on the statistical appearance of pixels, as a video synthesis diffusion model does.

Examples of character motion generation from Genesis, using a prompt that includes, “A miniature Wukong holding a stick in his hand sprints across a table surface for 3 seconds, then jumps into the air, and swings his right arm downward during landing.”

While the generative system isn’t yet part of the code available on GitHub, the team plans to release it in the future.

Training tomorrow’s robots today (using Python)

Genesis remains under active development on GitHub, where the team accepts community contributions.

The platform stands out from other 3D world simulators for robotic training by using Python for both its user interface and core physics engine. Other engines use C++ or CUDA for their underlying calculations while wrapping them in Python APIs. Genesis takes a Python-first approach.

Notably, because the Genesis platform is non-proprietary, high-speed robot training simulations are available to any researcher for free through simple Python commands that run on regular computers with off-the-shelf hardware.
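To give a rough sense of what a physics simulation loop written directly in Python can look like, here is a toy sketch. It is not Genesis code and does not use the Genesis API. The `Ball` class, the `step` and `simulate` functions, and the restitution value are all hypothetical names and numbers chosen for illustration. The sketch only shows the general pattern of storing the world's state as data and advancing it one small time step at a time.

```python
from dataclasses import dataclass

GRAVITY = -9.81  # m/s^2 along the vertical axis (illustrative constant)


@dataclass
class Ball:
    """Hypothetical world state: one ball above a flat ground plane."""
    height: float              # metres above the ground
    velocity: float            # vertical velocity in m/s
    restitution: float = 0.8   # fraction of speed kept after a bounce (assumed)


def step(ball: Ball, dt: float) -> None:
    """Advance the ball by one time step using semi-implicit Euler."""
    ball.velocity += GRAVITY * dt       # update velocity first
    ball.height += ball.velocity * dt   # then position, using the new velocity
    if ball.height < 0.0:               # crude ground contact: clamp and bounce
        ball.height = 0.0
        ball.velocity = -ball.velocity * ball.restitution


def simulate(ball: Ball, steps: int, dt: float = 0.01) -> list:
    """Run the step loop and record the height after every step."""
    heights = []
    for _ in range(steps):
        step(ball, dt)
        heights.append(ball.height)
    return heights


if __name__ == "__main__":
    trajectory = simulate(Ball(height=1.0, velocity=0.0), steps=300)
    print(f"lowest: {min(trajectory):.3f} m, final: {trajectory[-1]:.3f} m")
```

A real robotics simulator tracks many bodies, joints, and contacts at once and runs those updates in parallel, but the basic loop of updating state and stepping forward in time has the same shape.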

Previously, running robot simulations required complex programming and specialized hardware, Fan wrote in his post announcing Genesis, and he argued that shouldn’t be the case. “Robotics should be a moonshot initiative owned by all of humanity,” he wrote.