By Karan Singh

It’s time for another dive into how Tesla intends to implement FSD. Once again, a shout-out to SETI Park over on X for their excellent coverage of Tesla’s patents.

This time, it’s about how Tesla is building a “universal translator” for AI, allowing its FSD or other neural networks to adapt seamlessly to different hardware platforms.

That translation layer can allow a complex neural net, like FSD, to run on pretty much any platform that meets its minimum requirements. This could drastically reduce training time, help the network adapt to platform-specific constraints, and let it make decisions and learn faster.

We’ll break down the key points of the patent and make them as understandable as possible. This new patent is likely how Tesla will implement FSD on non-Tesla vehicles, Optimus, and other devices.

Decision Making

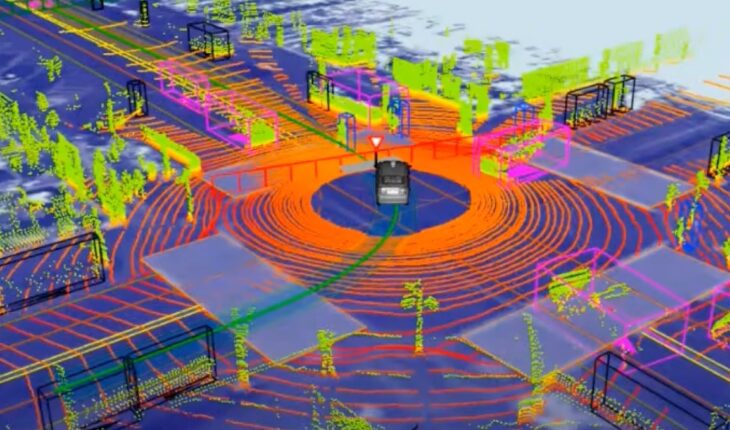

Imagine a neural network as a decision-making machine. But building one also requires making a series of decisions about its structure and data processing methods. Think of it like choosing the right ingredients and cooking techniques for a complex recipe. These choices, called “decision points,” play a crucial role in how well the neural network performs on a given hardware platform.

To make these decisions automatically, Tesla has developed a system that acts like a “run-while-training” neural net. This ingenious system analyzes the hardware’s capabilities and adapts the neural network on the fly, ensuring optimal performance regardless of the platform.

Constraints

Every hardware platform has its limitations – processing power, memory capacity, supported instructions, and so on. These limitations act as “constraints” that dictate how the neural network can be configured. Think of it like trying to bake a cake in a kitchen with a small oven and limited counter space. You need to adjust your recipe and techniques to fit the constraints of your kitchen or tools.

Tesla’s system automatically identifies these constraints, ensuring the neural network can operate within the boundaries of the hardware. This means FSD could potentially be transferred from one vehicle to another and adapt quickly to the new environment.
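To make decision points and constraints a bit more concrete, here’s a minimal Python sketch. It assumes a handful of hypothetical decision points (layout, convolution algorithm, accelerator) and models a platform simply as the set of options it supports. `HardwareSpec`, `satisfies`, and `valid_configs` are illustrative names, not anything taken from Tesla’s patent.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical decision points and options, chosen for illustration only.
DECISION_POINTS = {
    "layout": ["NCHW", "NHWC"],
    "conv_algorithm": ["direct", "winograd", "fft"],
    "accelerator": ["cpu", "gpu", "npu"],
}


@dataclass(frozen=True)
class HardwareSpec:
    """Hypothetical summary of what a target platform supports."""
    layouts: frozenset
    conv_algorithms: frozenset
    accelerators: frozenset


def satisfies(config: dict, hw: HardwareSpec) -> bool:
    """True if every choice in `config` falls within the platform's constraints."""
    return (
        config["layout"] in hw.layouts
        and config["conv_algorithm"] in hw.conv_algorithms
        and config["accelerator"] in hw.accelerators
    )


def valid_configs(hw: HardwareSpec) -> list:
    """Enumerate every combination of decision points and keep the valid ones."""
    names = list(DECISION_POINTS)
    combos = (dict(zip(names, values)) for values in product(*DECISION_POINTS.values()))
    return [c for c in combos if satisfies(c, hw)]


small_platform = HardwareSpec(
    layouts=frozenset({"NHWC"}),
    conv_algorithms=frozenset({"direct", "winograd"}),
    accelerators=frozenset({"cpu", "npu"}),
)
print(len(valid_configs(small_platform)))  # 4 of the 18 possible combinations
```

Brute-force enumeration is fine for a toy like this, but the number of combinations multiplies with every new decision point, which is why a smarter search becomes necessary at real scale.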

Let’s break down some of the key decision points and constraints involved:

- Data Layout: Neural networks process vast amounts of data. How this data is organized in memory (the “data layout”) significantly impacts performance. Different hardware platforms may favor different layouts. For example, some might be more efficient with data organized in the NCHW format (batch, channels, height, width), while others might prefer NHWC (batch, height, width, channels). Tesla’s system automatically selects the optimal layout for the target hardware.

- Algorithm Selection: Many algorithms can implement the same operation within a neural network, such as convolution, which is essential for image processing. Some, like Winograd convolution, are faster for small kernels but only apply to certain kernel sizes and strides, and may need specific hardware or library support. Others, like Fast Fourier Transform (FFT) convolution, handle larger kernels well but can be slower for the small kernels common in vision networks. Tesla’s system intelligently chooses the best algorithm based on the hardware’s capabilities.

- Hardware Acceleration: Modern hardware often includes specialized processors designed to accelerate neural network operations. These include Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs). Tesla’s system identifies and utilizes these accelerators, maximizing performance on the given platform.
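The data layout point is easier to see with the index arithmetic. This short sketch uses standard row-major addressing (the helper names are hypothetical) to compute where element (n, c, h, w) lands in memory under each layout. In NHWC, all channels of a single pixel sit next to each other; in NCHW, each channel is its own contiguous plane. Which one is faster depends on how a given chip and its kernels read memory.

```python
def offset_nchw(n: int, c: int, h: int, w: int, C: int, H: int, W: int) -> int:
    """Flat index of element (n, c, h, w) when memory is ordered N, C, H, W."""
    return ((n * C + c) * H + h) * W + w


def offset_nhwc(n: int, c: int, h: int, w: int, C: int, H: int, W: int) -> int:
    """Flat index of element (n, c, h, w) when memory is ordered N, H, W, C."""
    return ((n * H + h) * W + w) * C + c


# A single 3-channel 4x4 image: where do the three channel values of pixel (0, 0) live?
C, H, W = 3, 4, 4
print([offset_nchw(0, c, 0, 0, C, H, W) for c in range(C)])  # [0, 16, 32]: one plane apart
print([offset_nhwc(0, c, 0, 0, C, H, W) for c in range(C)])  # [0, 1, 2]: side by side
```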

Satisfiability

To find the best configuration for a given platform, Tesla employs a “satisfiability solver.” This powerful tool, specifically a Satisfiability Modulo Theories (SMT) solver, acts like a sophisticated puzzle-solving engine. It takes the neural network’s requirements and the hardware’s limitations, expressed as logical formulas, and searches for a solution that satisfies all constraints. Try thinking of it as putting the puzzle pieces together after the borders (constraints) have been established.

Here’s how it works, step by step:

- Define the Problem: The system translates the neural network’s needs and the hardware’s constraints into a set of logical statements. For example, “the data layout must be NHWC” or “the convolution algorithm must be supported by the GPU.”

- Search for Solutions: The SMT solver explores the vast space of possible configurations, using logical deduction to eliminate invalid options. It systematically tries different combinations of settings, like adjusting the data layout, selecting algorithms, and enabling hardware acceleration.

- Find Valid Configurations: The solver identifies configurations that satisfy all the constraints. These are potential solutions to the “puzzle” of running the neural network efficiently on the given hardware.
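Here’s a rough sketch of the search step. A real SMT solver such as Z3 reasons over richer theories (integers, bit-vectors, and so on) and learns from conflicts; this is a much simpler stand-in, a plain backtracking search over finite choices that abandons a partial configuration as soon as it breaks a constraint. The domains and constraints below are hypothetical platform rules invented for illustration.

```python
from typing import Callable, Optional

Assignment = dict[str, str]
# Each constraint names the variables it reads plus a predicate over their values.
Constraint = tuple[tuple[str, ...], Callable[..., bool]]

DOMAINS: dict[str, list[str]] = {
    "accelerator": ["gpu", "npu", "cpu"],
    "layout": ["NCHW", "NHWC"],
    "conv_algorithm": ["winograd", "fft", "direct"],
}

CONSTRAINTS: list[Constraint] = [
    # Hypothetical rule: the NPU only accepts NHWC tensors.
    (("accelerator", "layout"), lambda acc, lay: acc != "npu" or lay == "NHWC"),
    # Hypothetical rule: this platform's GPU library has no FFT convolution.
    (("accelerator", "conv_algorithm"), lambda acc, alg: not (acc == "gpu" and alg == "fft")),
    # Hypothetical rule: Winograd kernels exist only for NHWC here.
    (("layout", "conv_algorithm"), lambda lay, alg: alg != "winograd" or lay == "NHWC"),
]


def consistent(assignment: Assignment) -> bool:
    """Check every constraint whose variables have all been assigned so far."""
    for variables, predicate in CONSTRAINTS:
        if all(v in assignment for v in variables):
            if not predicate(*(assignment[v] for v in variables)):
                return False
    return True


def solve(assignment: Optional[Assignment] = None) -> list[Assignment]:
    """Depth-first search that drops a branch as soon as it violates a constraint."""
    assignment = {} if assignment is None else assignment
    if len(assignment) == len(DOMAINS):
        return [dict(assignment)]
    var = next(v for v in DOMAINS if v not in assignment)
    solutions = []
    for value in DOMAINS[var]:
        assignment[var] = value
        if consistent(assignment):  # prune: e.g. npu + NCHW never reaches the algorithm choice
            solutions.extend(solve(assignment))
        del assignment[var]
    return solutions


solutions = solve()
print(len(solutions))  # 11
print(solutions[0])    # {'accelerator': 'gpu', 'layout': 'NCHW', 'conv_algorithm': 'direct'}
```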

Optimization

Finding a working configuration is one thing, but finding the best configuration is the real challenge. This involves optimizing for various performance metrics, such as:

- Inference Speed: How quickly the network processes data and makes decisions. This is crucial for real-time applications like FSD.

- Power Consumption: The amount of energy used by the network. Optimizing power consumption is essential for extending battery life in electric vehicles and robots.

- Memory Usage: The amount of memory required to store the network and its data. Minimizing memory usage is especially important for resource-constrained devices.

- Accuracy: Ensuring the network maintains or improves its accuracy on the new platform is paramount for safety and reliability.

Tesla’s system evaluates candidate configurations based on these metrics, selecting the one that delivers the best overall performance.
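The patent coverage doesn’t say how Tesla weighs these metrics against each other. One common approach, shown here purely as an assumption, is to treat accuracy as a hard floor and then minimize a weighted sum of normalized costs. The weights, the accuracy threshold, and every candidate number below are made up.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    latency_ms: float  # inference time per frame (lower is better)
    power_w: float     # average power draw (lower is better)
    memory_mb: float   # peak memory footprint (lower is better)
    accuracy: float    # task accuracy in [0, 1] (higher is better)


# Hypothetical weights and floor; a real system would tune these per platform.
WEIGHTS = {"latency_ms": 0.4, "power_w": 0.3, "memory_mb": 0.1, "accuracy": 0.2}
MIN_ACCURACY = 0.95


def normalized(values: list) -> list:
    """Scale numbers to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(values), max(values)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]


def pick_best(candidates: list) -> Candidate:
    """Lowest weighted cost among candidates that meet the accuracy floor."""
    eligible = [c for c in candidates if c.accuracy >= MIN_ACCURACY]
    if not eligible:
        raise ValueError("no configuration meets the accuracy floor")
    costs = [0.0] * len(eligible)
    for metric, weight in WEIGHTS.items():
        for i, s in enumerate(normalized([getattr(c, metric) for c in eligible])):
            # Accuracy is "higher is better", so invert it into a cost.
            costs[i] += weight * ((1 - s) if metric == "accuracy" else s)
    return eligible[min(range(len(eligible)), key=lambda i: costs[i])]


candidates = [  # made-up measurements for four candidate configurations
    Candidate("nhwc-winograd-npu", latency_ms=8.0, power_w=20.0, memory_mb=300.0, accuracy=0.97),
    Candidate("nchw-direct-gpu", latency_ms=6.0, power_w=45.0, memory_mb=500.0, accuracy=0.97),
    Candidate("nhwc-fft-cpu", latency_ms=30.0, power_w=15.0, memory_mb=200.0, accuracy=0.96),
    Candidate("aggressive-quantized", latency_ms=3.0, power_w=10.0, memory_mb=100.0, accuracy=0.90),
]
print(pick_best(candidates).name)  # nhwc-winograd-npu
```

The fastest candidate loses here because it falls below the accuracy floor, which mirrors the point that accuracy is a safety requirement rather than just another metric to trade away.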

Translation Layer vs Satisfiability Solver

It’s important to distinguish between the “translation layer” and the satisfiability solver. The translation layer is the overarching system that manages the entire adaptation process. It includes components that analyze the hardware, define the constraints, and invoke the SMT solver. The solver is a specific tool used by the translation layer to find valid configurations. Think of the translation layer as the conductor of an orchestra and the SMT solver as one of the instruments playing a crucial role in the symphony of AI adaptation.
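That division of labor can be sketched as a small orchestrator that owns the workflow (analyze the hardware, build constraints, call a solver, pick the best result) while treating the solver as a swappable part. Everything here is hypothetical: `TranslationLayer`, the hardware dictionary format, and `brute_force_solver`, which stands in for a real SMT solver.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable

Config = dict


def brute_force_solver(constraints: list, options: dict) -> list:
    """Stand-in for an SMT solver: every configuration that passes all predicates."""
    names = list(options)
    configs = (dict(zip(names, values)) for values in product(*options.values()))
    return [cfg for cfg in configs if all(check(cfg) for check in constraints)]


@dataclass
class TranslationLayer:
    """Hypothetical orchestrator: owns the workflow, delegates the search."""
    solver: Callable[[list, dict], list]
    cost: Callable[[Config], float]  # lower is better

    def build_constraints(self, hw: dict) -> list:
        # Express the hardware's limits as predicates over a candidate configuration.
        return [
            lambda cfg: cfg["layout"] in hw["layouts"],
            lambda cfg: cfg["accelerator"] in hw["accelerators"],
        ]

    def adapt(self, hw: dict, options: dict) -> Config:
        constraints = self.build_constraints(hw)
        candidates = self.solver(constraints, options)  # the solver is just one component
        if not candidates:
            raise RuntimeError("platform does not meet the network's minimum requirements")
        return min(candidates, key=self.cost)  # choose the best valid configuration


layer = TranslationLayer(
    solver=brute_force_solver,
    cost=lambda cfg: {"npu": 0, "gpu": 1, "cpu": 2}[cfg["accelerator"]],
)
hw = {"layouts": {"NHWC"}, "accelerators": {"cpu", "npu"}}
options = {"layout": ["NCHW", "NHWC"], "accelerator": ["cpu", "gpu", "npu"]}
print(layer.adapt(hw, options))  # {'layout': 'NHWC', 'accelerator': 'npu'}
```

Because the solver is passed in, it could be swapped for a real SMT-backed search without touching the rest of the workflow, which is roughly the conductor-and-instrument relationship described above.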

Simple Terms

Imagine you have a complex recipe (the neural network) and want to cook it in different kitchens (hardware platforms). Some kitchens have a gas stove, others electric; some have a large oven, others a small one. Tesla’s system acts like a master chef, adjusting the recipe and techniques to work best in each kitchen, ensuring a delicious meal (efficient AI) no matter the cooking environment.

What Does This Mean?

Now, let’s wrap this all up and put it into context: what does it mean for Tesla? Quite a lot, in fact. It means that Tesla is building a translation layer that could adapt FSD to any platform, as long as that platform meets the minimum constraints.

That means Tesla will be able to rapidly accelerate the deployment of FSD on new platforms while also finding the ideal configurations to maximize both decision-making speed and power efficiency across that range of platforms.

Putting it all together, Tesla is preparing to license FSD, which is an exciting prospect. And not just for vehicles: remember that Tesla’s humanoid robot, Optimus, also runs on FSD. FSD itself may be an extremely adaptable vision-based AI.

By Karan Singh

Tesla recently rolled out power efficiency improvements to its Sentry Mode feature for the Cybertruck with software update 2024.38.4. The update drastically reduces the vehicle’s power consumption when Sentry Mode is active.

We’ve now uncovered more details on how Tesla achieved such a drastic drop in power consumption, which Tesla estimated at 40%.

Tesla made architectural changes to how it processes and analyzes video, optimizing which components handle which tasks. While the Cybertruck is the first to benefit from these advancements, Tesla plans to extend these upgrades to other vehicles in the future.

Sentry Mode Power Consumption

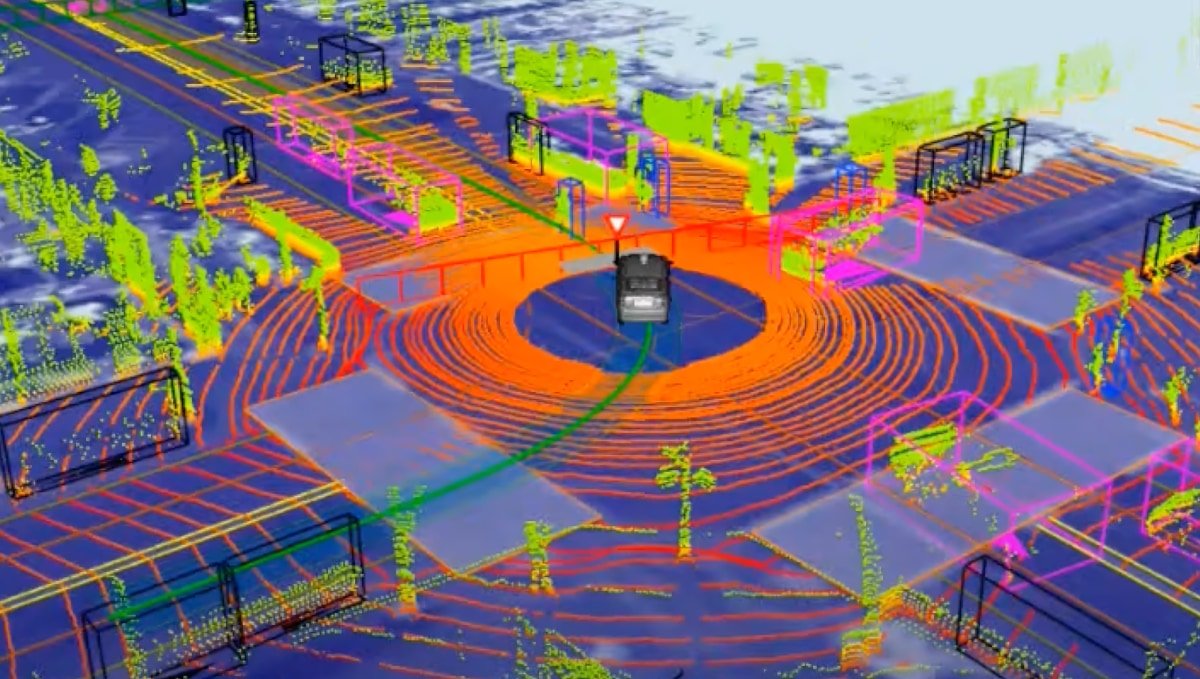

Tesla vehicles feature two main computers: the MCU (Media Control Unit) computer, which powers the vehicle’s infotainment center, and the FSD computer, which is responsible for Autopilot and FSD. Both of these computers remain on and powered any time the vehicle is awake, consuming about 250-300 watts.

Typically, the vehicle only uses this power while it’s awake or actively driving. It’s not a major concern since the car automatically goes to sleep and shuts down its computers after about 15 minutes of inactivity. However, the larger issue is that these computers also need to remain on when Sentry Mode is active, causing a 250-watt draw whenever Sentry Mode is on.
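To put that 250-watt figure in perspective, here’s the back-of-the-envelope arithmetic. It assumes Sentry Mode stays on for a full 24 hours and applies Tesla’s estimated 40% reduction directly to the 250 W draw; real consumption will vary with temperature, other loads, and how long Sentry Mode actually runs.

```python
def energy_kwh(watts: float, hours: float) -> float:
    """Energy used by a constant electrical load, in kilowatt-hours."""
    return watts * hours / 1000.0


baseline_w = 250.0                    # Sentry Mode draw with both computers awake
improved_w = baseline_w * (1 - 0.40)  # assumption: Tesla's 40% estimate applies to this figure

for label, watts in (("before", baseline_w), ("after", improved_w)):
    print(f"{label}: {watts:.0f} W -> {energy_kwh(watts, 24):.1f} kWh per day")
# before: 250 W -> 6.0 kWh per day
# after: 150 W -> 3.6 kWh per day
```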

Interconnected System

Today, the vehicle’s cameras are connected to the FSD computer, which connects to the MCU, which in turn connects to the USB ports. Because of this chained setup, everything needs to remain powered. Footage needs to be streamed from the FSD computer into the MCU, where processes like motion detection occur. The data then needs to be compressed before finally being written to the USB drive. That’s a lengthy process, requiring multiple computers to remain on just to record and save live video.

Architectural Changes

Tesla is making some architectural changes to address Sentry Mode’s high power consumption by shifting the responsibilities of the vehicle’s computers. By moving motion detection, and possibly compression, to the FSD computer, Tesla can now keep the MCU asleep. The MCU is still required to push the video to the USB drive, but Tesla can now wake it only when it’s needed.

For instance, the FSD computer will still handle the connection to the vehicle’s cameras, but it will now also detect motion. When a Sentry event occurs, it can wake up the MCU to write the data to the USB drive and then let it go back to sleep.

This approach ensures the MCU isn’t continuously powered to analyze and compress video, instead activating it only when data needs to be written.
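The new flow is essentially event-driven. The sketch below models it under loud assumptions: frames are tiny flattened grayscale lists, motion detection is simple frame differencing against a made-up threshold (Tesla hasn’t said what method it uses), and `Mcu` is a toy stand-in that only tracks how often it has to wake up.

```python
from dataclasses import dataclass, field

MOTION_THRESHOLD = 10.0  # hypothetical mean pixel-difference threshold


def motion_score(prev: list, curr: list) -> float:
    """Mean absolute pixel difference between two flattened grayscale frames."""
    return sum(abs(a - b) for a, b in zip(prev, curr)) / len(curr)


@dataclass
class Mcu:
    """Toy MCU model: stays asleep except while writing a clip to USB."""
    awake: bool = False
    wakeups: int = 0
    saved_clips: list = field(default_factory=list)

    def write_clip(self, clip: list) -> None:
        self.awake = True  # wake up only for the USB write
        self.wakeups += 1
        self.saved_clips.append(clip)
        self.awake = False  # then go straight back to sleep


def fsd_computer_loop(frames: list, mcu: Mcu) -> None:
    """Runs on the FSD computer: check every frame, wake the MCU only on motion."""
    for i in range(1, len(frames)):
        if motion_score(frames[i - 1], frames[i]) > MOTION_THRESHOLD:
            mcu.write_clip(frames[i - 1 : i + 1])


frames = [[0] * 4, [0] * 4, [50] * 4, [50] * 4, [0] * 4]  # made-up 4-pixel frames
mcu = Mcu()
fsd_computer_loop(frames, mcu)
print(mcu.wakeups)  # 2: one wake per motion event, none for the quiet frames
```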

Processor Isolation & Task Allocation

Tesla’s current architecture separates the Autopilot Unit (APU) from the MCU. This is done for several reasons, but first and foremost is safety. The MCU can be restarted independently, even mid-drive, without impacting the APU and key safety features.

Additionally, because the APU and MCU are isolated, each task, whether general processing or image transcoding, can be offloaded to whichever unit is better suited for it. This keeps both units operating within their optimal power and performance ranges, which helps manage energy consumption more efficiently.

Kernel-Level Power Management

Tesla has been working on more than just FSD or new vehicle visualization changes; it has also been optimizing the operating system’s underlying kernel. When the processors of the MCU and APU aren’t under heavy load, Tesla underclocks them, reducing power usage and heat generation.
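Why does underclocking help so much? A standard first-order model for the dynamic power of CMOS chips is P ≈ αCV²f, so power scales linearly with clock frequency and with the square of voltage, and lower clocks usually permit lower voltage too. The scaling factors below are hypothetical; Tesla hasn’t shared how far it drops clocks or voltage, and this model ignores leakage power.

```python
def relative_dynamic_power(freq_scale: float, voltage_scale: float = 1.0) -> float:
    """First-order CMOS dynamic power relative to baseline (P proportional to C * V**2 * f).

    Switched capacitance and activity factor are assumed unchanged, so they cancel out.
    Static (leakage) power is ignored.
    """
    return voltage_scale ** 2 * freq_scale


print(f"{relative_dynamic_power(0.6):.3f}")       # 0.600: clock cut alone
print(f"{relative_dynamic_power(0.6, 0.8):.3f}")  # 0.384: clock and voltage cut together
```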

Of course, other kernel optimizations and programming tricks, such as the ones Tesla uses to optimize its FSD models, also factor into the increased overall efficiency of the vehicles.

Additional Benefits

Since Tesla vehicles also include a Dashcam that processes video, we may also see these power savings whenever the vehicle is awake. This could also benefit other features, such as Tesla’s Summon Standby feature, which keeps the vehicle awake and processing video to give users almost instant access to Summon.

Roll Out to Other Vehicles

While the Cybertruck is the only vehicle so far to receive these Sentry Mode power improvements, we were told that they’re coming to other vehicles too. Tesla is introducing these changes on the Cybertruck first, leveraging its smaller user base for initial testing before expanding the rollout to other vehicles.

USB Port Power Management

To further conserve energy and reduce waste, Tesla now powers down the USB ports, even if Sentry Mode is active. This change has impacted many users who rely on 12V sockets or USB ports staying powered to run accessories such as small vehicle refrigerators.

It’s not immediately clear whether this change is tied to the Sentry Mode improvements or whether power to the 12V outlets was cut strictly due to safety concerns.

By Karan Singh

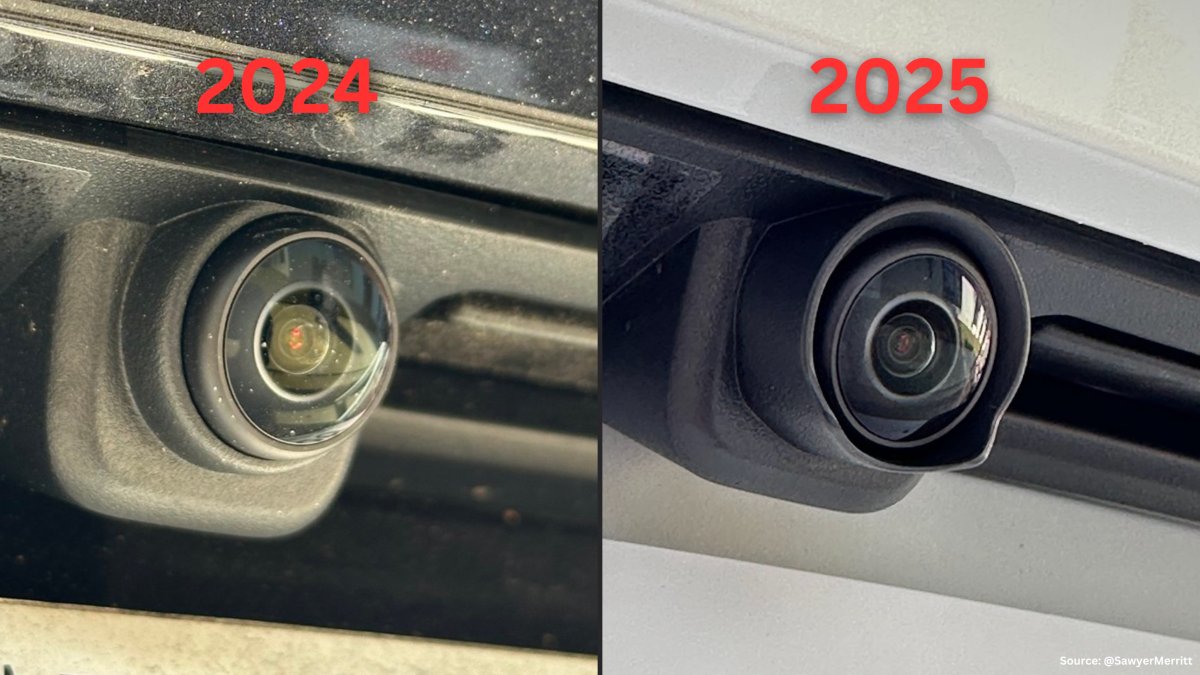

Tesla has initiated another minor design revision, this time improving the rear camera on the Model 3 and Model Y. This minor revision adds a protective lip around the camera, providing better shielding against rain, dirt, snow, and general road grime.

The design revision began rolling out on Model Y vehicles from Shanghai first, with the earliest vehicles spotted with the revision dated as early as late September 2024. Tesla regularly makes minor design revisions to its vehicles between model years, an iterative process that gradually improves them as more and more vehicles are built.

Design Revision

The design revision adds a small shield around the rear camera, including a small lip towards the bottom end. The little lip is likely going to make the biggest difference, as it will help prevent kickback and wash from the tires landing on the camera lens, which can obscure it.

For now, nothing indicates a revision that includes a camera washer, similar to the Cybertruck’s front camera washer. However, since the Model Y Juniper is expected to arrive with a front camera, it’ll probably also have a front camera washer.

This lip for the rear camera should be a nice addition, but we’ll have to see just how much of an improvement it provides in the upcoming winter season as the messy, slushy mix arrives in much of the United States and Canada.

3D Printed Accessory

If you’re feeling left out without the new rear camera shield, you’ll soon be able to 3D print and install a similar design. Some entrepreneurial 3D modelers have already started working on making a retrofittable shield for both the HW3 and HW4 rear cameras.

In the meantime, we recommend using ceramic coating on the rear camera to help keep that slush and grime moving when it does hit the camera. A good application of ceramic coating can help prevent buildup on the lens.

Model S and X

So far, we haven’t seen this design revision on recent Model S and Model X vehicles. There were previously rumors of a light refresh for both of the more premium vehicles. However, we haven’t seen any indication of those changes actually seeing the light of day.

Once the refreshes for these two vehicles arrive, we could see more drastic changes. Tesla has also indicated that it plans to use some of its upcoming battery cell technology in 2026, so we could be waiting a while before seeing further updates to the Model S and Model X.

We’ll be looking for both vehicles to receive this design revision. If you spot them, let us know on social media or on our forums.