Simulation is critical to successful engineering, and it’s becoming even more so thanks to trends such as simulation-driven design, digital twins and AI simulation.

Knowing how to use simulation software is one piece of the puzzle. Engineering teams must also learn to incorporate simulation into design and development workflows to use its vast capabilities effectively.

Traditionally, simulation was used primarily as a validation tool in later stages of product development to verify that a product would meet performance and safety requirements. This approach minimized the risk of costly redesigns and failures post-manufacturing. However, placing simulation at the end of the design process limits its impact on early-stage innovation and conceptualization.

Additionally, well before today’s advanced software and computing technologies, limited computational power made high-fidelity simulations time-consuming and less feasible, especially for complex systems. High costs and the need for specialized expertise restricted their use to larger organizations with significant resources. Consequently, specialized teams often conducted simulations in isolation, leading to less integration with the overall design and development process.

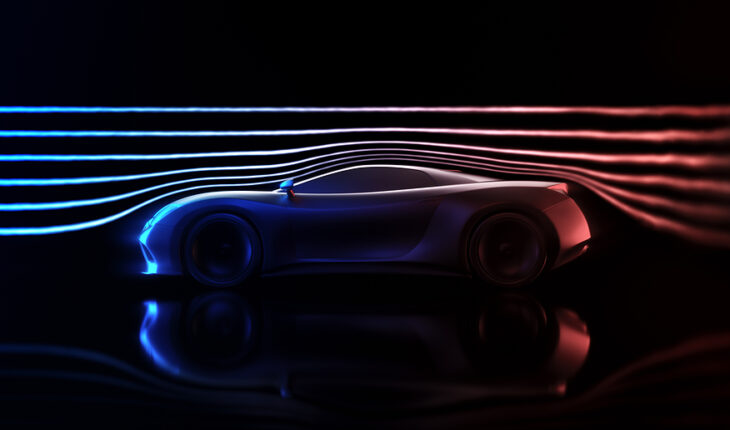

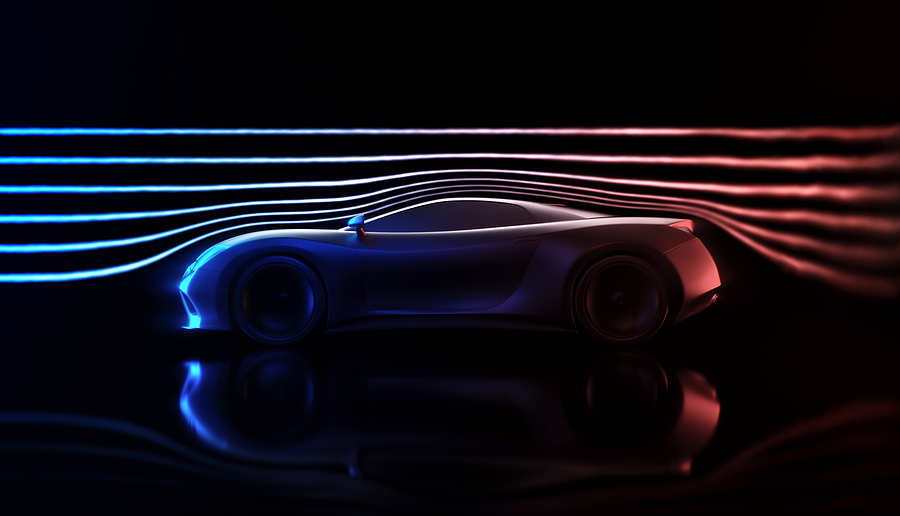

Modern practices integrate simulation early in the design phase, allowing for rapid prototyping and iterative improvements. Advances in computational power and software capabilities enable automated optimization that reduces iteration time and effort. Today’s software can also handle multiphysics problems, integrating tightly coupled physical phenomena for a more comprehensive analysis. Plus, as simulation tools become more user-friendly, more organizations can adopt modern simulation-driven design approaches.
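To make the idea of automated optimization concrete, here is a minimal sketch. It assumes a closed-form cantilever-beam formula stands in for a real solver, and every name, load value and candidate thickness is hypothetical, chosen only for illustration. The loop evaluates each candidate design virtually and keeps the thinnest one that meets a deflection limit.

```python
# Hypothetical example: a closed-form beam formula stands in for a real solver.

def tip_deflection(force_n, length_m, width_m, thickness_m, youngs_modulus_pa):
    """Tip deflection of an end-loaded cantilever: delta = F L^3 / (3 E I)."""
    second_moment = width_m * thickness_m ** 3 / 12.0  # rectangular cross-section
    return force_n * length_m ** 3 / (3.0 * youngs_modulus_pa * second_moment)


def thinnest_passing_design(candidates_m, max_deflection_m, **load_case):
    """Return the smallest candidate thickness that meets the deflection limit."""
    for thickness in sorted(candidates_m):
        if tip_deflection(thickness_m=thickness, **load_case) <= max_deflection_m:
            return thickness
    return None  # no candidate satisfies the requirement


# Assumed load case: 100 N on a 0.5 m steel beam (E = 200 GPa), 30 mm wide.
load_case = dict(force_n=100.0, length_m=0.5, width_m=0.03, youngs_modulus_pa=200e9)
candidates = [t / 1000.0 for t in range(2, 21)]  # 2 mm to 20 mm in 1 mm steps

best = thinnest_passing_design(candidates, max_deflection_m=0.002, **load_case)
print(f"Thinnest passing thickness: {best * 1000:.0f} mm")
```

In practice the formula would be replaced by a call into a CAE solver, and the simple sweep by a proper optimizer, but the loop structure of proposing, simulating and checking stays the same.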

Simulation-driven design moves simulation from the later stages of the product development cycle to the beginning and keeps it in use throughout to inform design decisions. This can accelerate the design phase by allowing for rapid iteration and testing in a virtual environment before producing physical prototypes. It also enables engineers to explore innovative and unconventional designs and materials that may be too risky or expensive to test physically. Integrating simulation with design also helps engineers identify flaws and failures earlier, reducing the risk of costly recalls and redesigns after product launch.

Though this approach makes sense conceptually, implementing it can be challenging. Teams accustomed to more traditional, linear design cycles, where models and files are exchanged between design and simulation engineers, must adopt new collaborative ways of working. Much like moving from a waterfall to an agile approach, teams must transform their culture, not just their technologies or processes.

Some software providers have made simulation-driven design adoption easier by integrating CAD and CAE capabilities into one platform. They also offer cloud-based services to support asynchronous design cycles and disparate teams. Plus, such platforms are becoming more accessible so that designers and engineers of varying skill levels can leverage the software with less technical experience. This is often referred to as the democratization of simulation, a paradigm that opens CAE capabilities to novices and individuals in various fields. However, anyone using simulation software still needs a fundamental understanding of the problems being solved and the ability to assess the results for feasibility.

Nonetheless, with integrated CAE platforms and a simulation-driven design approach, teams can accelerate designs, improve quality and manufacturability and make physical prototyping and final testing more efficient and cost-effective.

The terms “simulation” and “digital twin” are sometimes used interchangeably, yet they refer to different technologies and are used for different purposes. The distinctions between the terms and technologies are up for debate and will likely continue to blur over time.

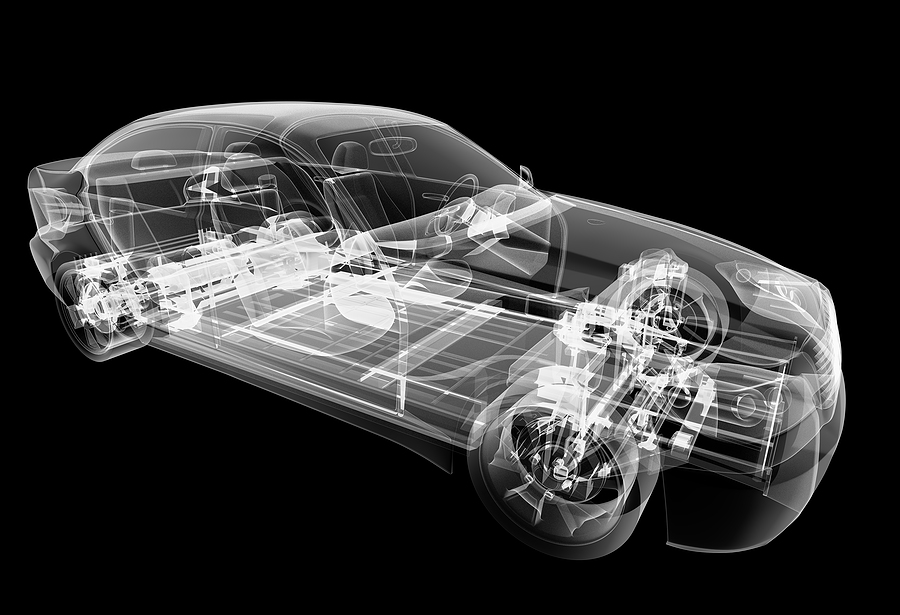

Engineers often use simulation software to mathematically model and test designs before manufacturing and to understand post-production design failures. In contrast, digital twins are virtual models that replicate the status, operation and condition of a real-world asset, such as a production-line robot or compressed air system. This requires sensors and transmitters on the physical asset to send real-time data to the software.

Though different in function, simulation and digital twins can intersect to improve products and systems.

For example, engineers may create a digital twin of a real-world machine and then test it under certain conditions. With data continuously and accurately sent to the software, engineers can simulate how changes will affect the digital twin before adjusting settings or replacing components on the real-world machine.

From a data perspective, digital twins ideally have two-way communication with the physical assets they represent, while simulation typically only receives information. Also, digital twins continuously integrate real-time data, while simulation uses static data for model analysis. However, simulations can run parallel with digital twin data feeds to predict future states, optimize maintenance schedules, identify potential issues and suggest improvements.
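The data-flow difference can be sketched in a few lines. This is an illustrative toy under stated assumptions, not a real digital twin framework: the `PhysicalCompressor` and `DigitalTwin` classes, the fixed line loss and the pressure values are all hypothetical. The static simulation runs once on a fixed input, while the twin reads from the asset, runs a what-if simulation and writes a setpoint back.

```python
# Hypothetical toy model of a compressed air system; all names and values are assumptions.

def simulate_outlet(pressure_bar, line_loss_bar=0.5):
    """Toy simulation: outlet pressure is inlet pressure minus a fixed line loss."""
    return pressure_bar - line_loss_bar


class PhysicalCompressor:
    """Stand-in for a real asset with a sensor and a controllable setpoint."""

    def __init__(self, setpoint_bar):
        self.setpoint_bar = setpoint_bar

    def read_sensor(self):
        return {"pressure_bar": self.setpoint_bar}

    def apply_setpoint(self, setpoint_bar):
        self.setpoint_bar = setpoint_bar


class DigitalTwin:
    """Mirrors the asset's state and can send changes back to it."""

    def __init__(self, asset):
        self.asset = asset
        self.state = {}

    def sync(self):
        self.state = self.asset.read_sensor()  # asset -> twin

    def what_if(self, candidate_setpoint_bar):
        return simulate_outlet(candidate_setpoint_bar)  # simulate before acting

    def push(self, setpoint_bar):
        self.asset.apply_setpoint(setpoint_bar)  # twin -> asset
        self.sync()


# One-way, static use: a single run on a fixed input value.
static_result = simulate_outlet(7.0)

# Two-way use: read the asset, test a change virtually, then apply it.
compressor = PhysicalCompressor(setpoint_bar=7.0)
twin = DigitalTwin(compressor)
twin.sync()
predicted = twin.what_if(8.0)
if predicted >= 7.2:  # assumed minimum outlet pressure requirement
    twin.push(8.0)

print(static_result, predicted, twin.state)
```

A production twin would stream sensor data continuously rather than polling once, but the read, simulate and write-back cycle is the part that separates it from a standalone simulation.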

Every industry is exploring how artificial intelligence (AI) can improve technology and processes. From machine learning (ML) algorithms to large language models (LLMs), such as ChatGPT, capabilities abound to reduce costs and increase efficiency and quality.

In the simulation world, AI could be a game changer. It can automate tasks and streamline workflows so designers and engineers can focus on higher-value work that only humans can do. It also opens doors for non-experts to create designs and approximations with fewer technical skills.

For instance, AI algorithms can optimize computational processes and reduce run times. Simulation techniques such as reduced-order modeling (ROM) can use AI to simplify complex models and speed up solving with little loss of accuracy. ML algorithms can also improve validation by continuously learning from simulation results to detect errors and anomalies.

Some software providers are exploring a bottom-up approach to develop physics-based AI models that bypass the mathematical equations underpinning current solvers. Such software could analyze a CAD model’s behavior under loading conditions in a fraction of the time compared to traditional solvers. Simulation studies could be 100 times faster due to the AI algorithms alone and another 10 times faster using GPUs.
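Because the two gains are described as stacking, they multiply rather than add. The short calculation below works through that arithmetic, assuming the 100x and 10x figures quoted above and a hypothetical 10-hour baseline study that is not taken from any real benchmark.

```python
# Speedup factors from the text; the baseline runtime is a hypothetical assumption.

def combined_speedup(*factors):
    """Independent speedup factors compound multiplicatively."""
    total = 1.0
    for factor in factors:
        total *= factor
    return total


baseline_hours = 10.0  # assumed runtime on a traditional solver
speedup = combined_speedup(100, 10)  # AI algorithms, then GPUs
runtime_seconds = baseline_hours * 3600 / speedup

print(f"Combined speedup: {speedup:.0f}x")
print(f"Runtime: {runtime_seconds:.0f} s instead of {baseline_hours:.0f} h")
```

Under those assumptions, a study that once ran for most of a working day would finish in well under a minute, which is what makes interactive design exploration plausible.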

While a bottom-up approach attempts to create a general-purpose simulation AI trained on physics, top-down AI targets specific problems based on narrow training data. A top-down approach can be applied to any simulation problem, but as soon as the problem is tweaked, the model breaks down and the AI must be retrained. Though much more limited than a bottom-up approach, top-down AI is also easier to develop, which is why many simulation companies have already begun commercializing it.
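The fragility of narrowly trained models can be illustrated with a deliberately simple stand-in. In this sketch, a least-squares straight line plays the role of the trained AI and a cubic function plays the role of the full solver; both are assumptions for illustration and do not represent any vendor's method. The fit is reasonable inside the training range and fails badly once the problem moves outside it.

```python
# Hypothetical example: a line fit stands in for a top-down AI surrogate.

def full_solver(length_m):
    """Stand-in for an expensive simulation: response grows with length cubed."""
    return length_m ** 3


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def relative_error(predicted, actual):
    return abs(predicted - actual) / abs(actual)


# Narrow training data: beam lengths between 0.40 m and 0.60 m only.
train_x = [0.40, 0.45, 0.50, 0.55, 0.60]
train_y = [full_solver(x) for x in train_x]
slope, intercept = fit_line(train_x, train_y)


def surrogate(length_m):
    return slope * length_m + intercept


in_range_error = relative_error(surrogate(0.52), full_solver(0.52))
out_of_range_error = relative_error(surrogate(1.5), full_solver(1.5))

print(f"Error inside training range:  {in_range_error:.1%}")
print(f"Error outside training range: {out_of_range_error:.1%}")
```

Real surrogates are far more expressive than a straight line, but the same pattern applies: predictions are only trustworthy near the data the model was trained on, so a changed problem calls for new training data.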

Of course, there are caveats. AI’s effectiveness relies on data quality and availability. Poor data can lead to inaccurate models and predictions. Also, AI often requires significant computing resources, especially for training complex models, which can seem more like a problem shift than a solution. Regardless, many engineers look forward to incorporating more AI capabilities into simulation software to solve bigger problems faster and more accurately.