British-Canadian computer scientist Geoffrey Hinton, known as the “Godfather of Artificial Intelligence,” pioneered deep learning and artificial neural networks. This year, he was awarded the Nobel Prize in Physics for his contributions to artificial intelligence (AI). However, he recently said there is a 10 to 20 percent chance that AI will cause human extinction within the next 30 years, up from the 10 percent he estimated earlier.

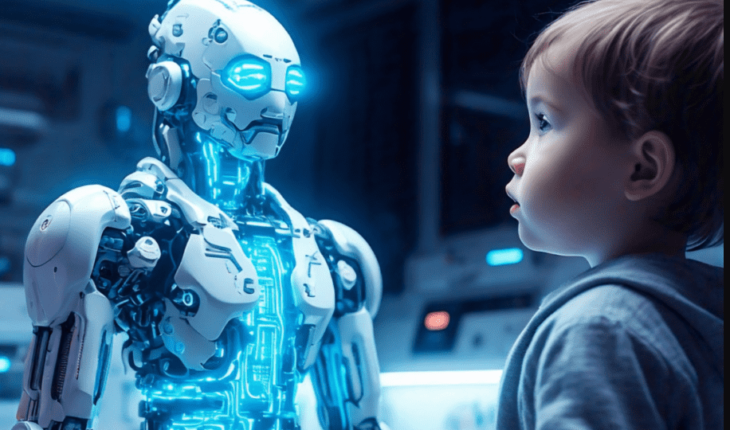

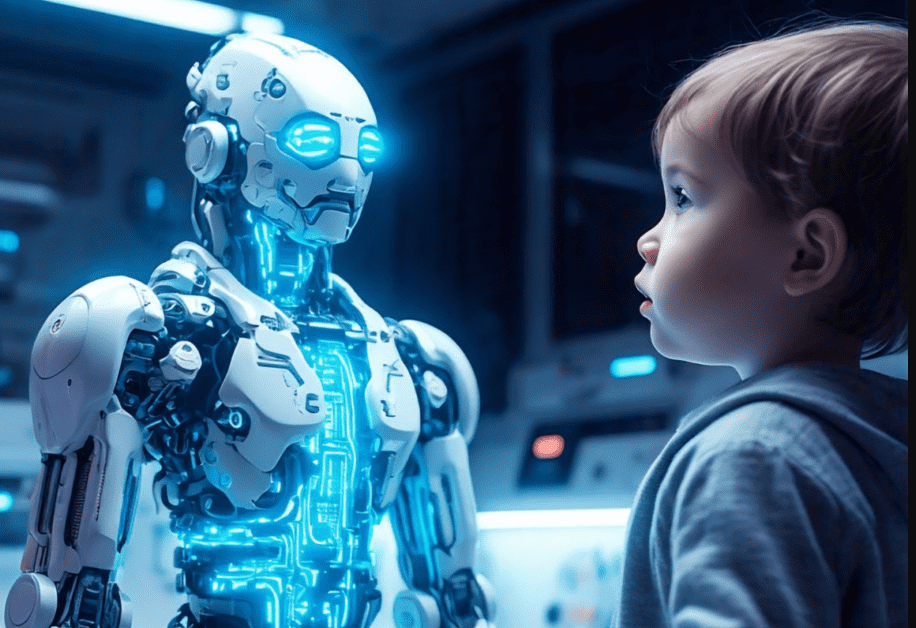

Humans are like three-year-olds in front of AI.

According to a recent report in The Guardian, Hinton told BBC Radio 4’s Today programme that the rapid development of AI has created unprecedented challenges for humanity. “We never had to deal with something smarter than ourselves. How many examples can you give of things that are less intelligent but controlling more intelligent things? Hardly. The only exceptions may be mothers and babies, but this has been achieved over millions of years of evolution.”

In the interview, Hinton likened humans to three-year-olds when compared with advanced AI systems, saying, “Think about yourself and a three-year-old; in the future, we will be three-year-olds in front of advanced highly intelligent systems.” He argues that this asymmetry of intelligence is likely to lead to humans losing control over AI.

A pioneer in neural network research, Hinton worked for Google for many years before quitting in 2023 to focus on the risks of AI. He has warned that AI systems may surpass human intelligence within the next two decades, and that the challenges and crises they bring will be unpredictable. He believes that the profit motive of companies alone cannot guarantee the safe development of AI, and he calls on governments worldwide to work together on strict regulatory mechanisms to avoid potential disasters.

Hinton’s core point is that AI capabilities are not just technological breakthroughs but could also change power structures and even affect the entire social order. He admits that he is both in awe of and apprehensive about the potential of this technology and bluntly states that “this is one of the most disruptive innovations in human history.”

At the same time, Yann LeCun, chief AI scientist at Mark Zuckerberg’s Meta and another key figure in the field, takes a very different view of the future of AI. He believes that, if properly utilized, AI can solve global challenges such as energy shortages and the unequal distribution of medical resources. LeCun stressed that exaggerating the threat of AI could overshadow its potential benefits and argued that the “end-of-the-world” narrative is biased.

LeCun argues that AI can not only improve efficiency but could also be a “savior” in solving the planet’s problems. He believes that as long as humans steer the technology responsibly, the benefits of AI will far outweigh the risks.

What does the future hold for AI?

The disagreement among experts about AI’s future shows how polarized the debate over the technology has become: on the one hand, there are deep concerns about the technology getting out of control; on the other, there are optimistic expectations about its potential. Against the backdrop of rapid technological advances, the debate about AI’s impact is heated, and regulatory issues are also in the spotlight.

At present, many experts believe that the development of AI needs to balance innovation and safety. Both businesses and governments should advance the technology while ensuring that rules are clear and enforced, to avoid potentially catastrophic consequences.

Nevertheless, in this long debate about humanity’s future, proponents and critics share one concern: how to ensure that the technology works for humanity and does not threaten its survival.